ZF recently presented its Level 4 autonomous shuttle. Could you tell us a little more about that project and the next steps?

As previously announced, ZF is now a full-service provider of autonomous transportation systems, offering not only autonomous shuttles but also project planning, implementation, operation, and maintenance/service of autonomous passenger transportation systems. We have recently demonstrated ZF autonomous transportation system shuttles in the UK and the US, and, of course, we already operate autonomous shuttles through our wholly owned 2getthere business, whose shuttles have been running commercially for many years in places like Abu Dhabi and Rotterdam. We are also launching the RaBUS project, which will deploy and research electric bus shuttles up to SAE Level 4 autonomy. The project will run in two German cities, Mannheim (focus: mixed inner-city traffic) and Friedrichshafen (focus: overland operation), providing local public transport with electrified, automated vehicles that offer, among other things, attractive travel speeds, and it is due to be tested by the end of 2023.

What were the special considerations to take into account with regard to the cameras and sensors?

Of course, full 360-degree coverage of the environment is a must for operation up to Level 4. We employ a comprehensive ZF sensor suite, including multiple cameras, radars and LiDARs, to achieve this, and sensor placement on the vehicle is also critical to ensure the best field of view of the surrounding environment and other road users. Because this expansive sensor set generates large amounts of real-time data, ZF also employs its ProAI high-performance computing platform, which ranks among the most powerful such devices in the mobility industry, to process this data and manage the operation of the vehicle.

Autonomous shuttles appear to be a serene way of getting around but there are hurdles still to cross. What’s your vision of AV shuttles 10 years from now?

Starting from today’s relatively limited applications in restricted-access areas like airports and university campuses, we see significant expansion into first-/last-mile fixed-route transport applications, especially those that require close to 24/7 operation and therefore offer operators the most attractive business cases. Ultimately, we expect expansion into mixed-traffic inner-city applications and into links between cities and rural areas, helping to reduce traffic and providing a safe and comfortable ride to work and other activities.

“AV shuttles will be an integrated part of a digital mobility ecosystem in which passengers book their multi-modal trips via smartphones.”

Mobility will be greener, safer and more efficient than it is today. AV shuttles will be a key enabler in transforming the current public transport system, particularly at a time when we face shortages of bus drivers.

Implementing AV shuttles requires close collaboration with regional and city planners to plan routes, discuss infrastructure and other requirements, and then design the right Autonomous Transport System for their needs. But in ten years we can envisage AV shuttles being a much more common feature of local transportation systems, for moving both people and goods.

We hear that some companies are investigating or proposing some level of autonomy for cars without using LiDAR. Do you think LiDAR is mandatory for autonomous driving?

Safety is in our DNA, and our goal is to deliver the best safety solutions we possibly can. We believe a sensor system based on multiple sensing technologies provides the most robust detection of a vehicle’s environment, because each technology has its own strengths and these capabilities are complementary: radar and LiDAR, for example, can precisely measure distances and relative closing speeds to fixed and moving objects, while cameras are good at object recognition and classification. LiDAR can be critical in edge cases, as it delivers more precise measurements than current camera or radar technology, so when seeking to deliver a very safe high-level autonomous vehicle, LiDAR has benefits. But LiDAR is not a prerequisite for all AD functions and levels of autonomy, and in the near term it remains a costly technology (although the industry is working hard to lower its cost), so OEMs will do their own cost-benefit analysis. We believe LiDAR improves the autonomous vehicle and does offer benefits; its market is unlikely to be as large as those for cameras and radar, and not all OEMs will go down that path, but there will certainly be a market for LiDAR.

There is a lot of hype around Vehicle-to-Vehicle (V2V) and Vehicle-to-Everything (V2X) communication and the possibilities for safer roadways and more efficient travel. What’s your view on the realistic possibilities?

Early forms of vehicle-to-infrastructure communication, such as the European eCall emergency crash notification system, have been successfully introduced into the market, and vehicle connectivity for infotainment systems is commonplace, but the evolution of V2X for safety applications has, in general, been rather slow. There are significant investment challenges, both for the infrastructure and for adding V2X transmitters/receivers to vehicles, as well as in securing a dedicated band or other means of keeping these communications discrete and effective; in the USA, for example, we have seen a battle over the 5.9 GHz V2X band because the automotive industry did not implement the technology early on. Some roadways have been instrumented and given defined lanes, with Japan leading the way, but there still seems to be a long way to go. With competing challenges such as vehicle electrification infrastructure and a stronger near-term focus on fitting ADAS systems like AEB, which technologies are successfully implemented may come down to a matter of priorities.

There appears to be more focus than ever on developing electric powertrain components. In terms of electrification, has the auto industry reached a turning point?

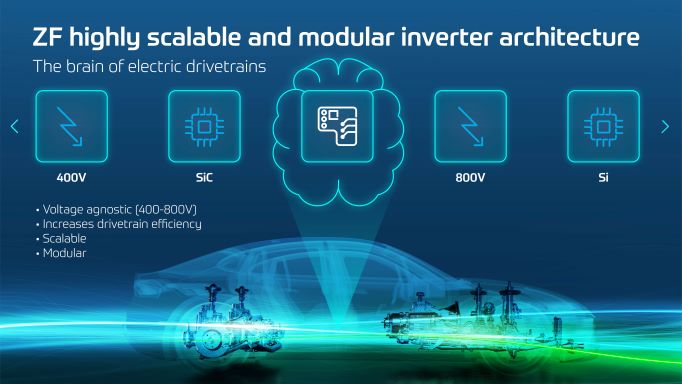

Global government action to lower CO2 emissions through internal combustion engine bans, together with the industry’s ongoing cost optimization of electrified powertrain systems, looks to be bringing that tipping point ever closer, and the many OEMs that have announced plans to move to full electric in the 2030-35 timeframe, or in some cases sooner, are evidence of that. For our part, ZF is moving full speed ahead on the development of electric powertrains and their key components: the inverter, the e-motor and gear conversion technology. We have longstanding expertise in motor and gear development and have also been involved for a number of years in inverter technology, the real brain and differentiator of electric drives. We have developed a scalable and modular e-motor inverter architecture that gives OEMs maximum flexibility, from 400V to 800V and from silicon to silicon carbide, and we are combining advanced inverter design, next-generation power semiconductors and highly intelligent software algorithms. This will enable ZF to leverage its deep experience in electric drives to increase efficiency and peak power and to shorten development cycles.

In addition, during the transition to an all-electric future, ZF is providing its industry-leading transmissions with integrated electric motors for hybrid and plug-in hybrid vehicle applications, further reducing the emissions of ICE vehicles.

Driver monitoring for fatigue and distraction has become a major focus of automotive safety regulators and governments worldwide. This trend looks set to continue with SAE Level 2 (partial) and Level 3 (conditional) semi-autonomous vehicles. To what extent are regulations (Euro NCAP, EU GSR, the SAFE Act of 2020) driving increasing demand for driver monitoring systems?

Considering that human error has been estimated to cause more than 90 percent of auto accidents, it’s no surprise that active driver monitoring is becoming a focal point for detecting drowsiness, distraction and other indicators of inattention, and the advent of smartphones and other portable electronic devices is a further complicating factor. Euro NCAP has taken an early lead in promoting the fitment of camera-based direct driver monitoring systems from 2023, and the European General Safety Regulation requires their fitment on all new vehicles sold in Europe from 2026, which will drive fitment there to 100%. Driver monitoring system regulations have also been proposed for the US market. More broadly, a number of crashes involving Level 2 automated vehicles in recent years have led safety and consumer organizations to call for direct driver monitoring systems to be a compulsory part of Level 2 and Level 3 automated driving systems, to ensure that the driver is either actively monitoring the automated driving system and environment (Level 2 systems) or capable of retaking control of the vehicle when required (Level 3 systems).

Consumer technologies such as fitness trackers have been popular for some time, monitoring our heart rates, performance and sleep, but how might the renewed focus on wellness translate to the automotive space?

ZF is continuously investigating ways to monitor the health and wellbeing of vehicle passengers as well as driver engagement levels. One approach uses sensors integrated directly into the vehicle HMI (e.g. the steering wheel) to measure vital signs such as heart rate and heart rate variability. Beyond directly sensing the hands on the steering wheel, camera-based interior monitoring of eye gaze allows ZF to determine different states of driver awareness.
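To illustrate what heart rate variability measures, here is a minimal Python sketch of two common time-domain metrics: mean heart rate and RMSSD (the root mean square of successive differences between beats). It assumes the steering-wheel sensor already delivers beat-to-beat (RR) intervals in milliseconds; the function names and sample values are hypothetical and do not represent ZF’s actual signal processing.

```python
from statistics import mean


def heart_rate_bpm(rr_ms: list[float]) -> float:
    """Average heart rate in beats per minute from RR intervals in milliseconds."""
    if not rr_ms:
        raise ValueError("need at least one RR interval")
    # One beat per RR interval: 60,000 ms per minute / mean interval = beats per minute.
    return 60_000 / mean(rr_ms)


def rmssd_ms(rr_ms: list[float]) -> float:
    """RMSSD: root mean square of successive RR-interval differences, in ms."""
    if len(rr_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive beat-to-beat intervals.
    diffs = [later - earlier for earlier, later in zip(rr_ms, rr_ms[1:])]
    return (sum(d * d for d in diffs) / len(diffs)) ** 0.5


if __name__ == "__main__":
    # Hypothetical RR intervals (ms), as an assumed steering-wheel sensor might report them.
    rr = [800, 810, 790, 805, 795]
    print(f"HR: {heart_rate_bpm(rr):.1f} bpm, RMSSD: {rmssd_ms(rr):.1f} ms")
```

For the sample intervals this prints an average of 75.0 bpm and an RMSSD of about 14.4 ms. Heart rate alone averages the beats away; RMSSD captures how much consecutive beats differ, which is why the two are typically reported together.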

In future, camera- or radar-based occupant monitoring systems will be able to indirectly monitor the health and attention level of the driver as well as other vehicle occupants. Detecting micromovements of the body and evaluating overall body pose offer valuable insights into the in-cabin situation and enable adaptive safety strategies. Another interesting application for such sensors is motion sickness: passengers being driven autonomously may be more prone to it, and detecting it could prompt countermeasures by the vehicle controllers. These are just a few examples of potential in-cabin vital-sign sensing, and we expect this field to expand in the future.